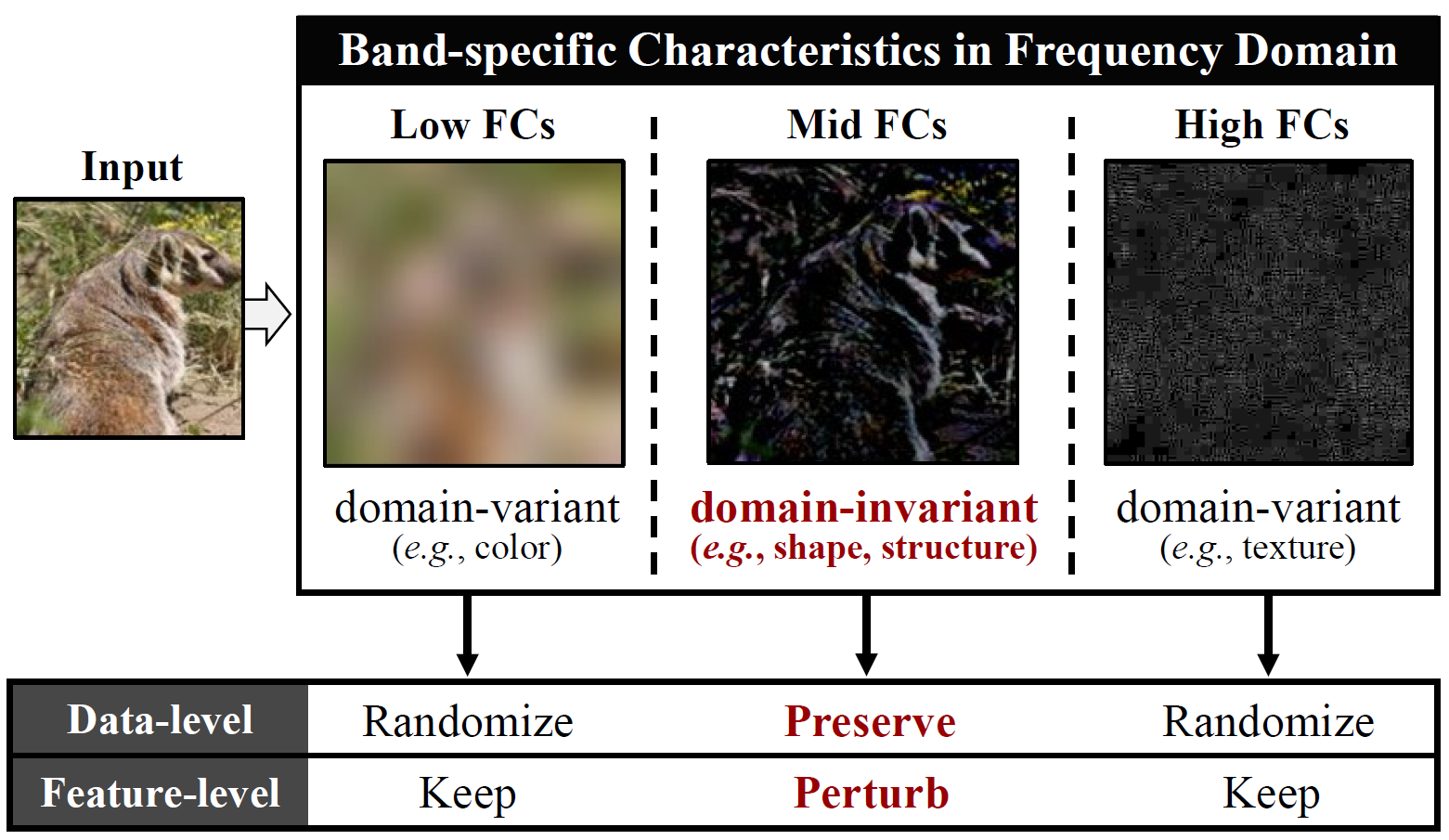

Deep neural networks are known to be vulnerable to security risks due to the inherently transferable nature of adversarial examples. Although recent generative model-based attacks have demonstrated strong transferability, designing an efficient attack strategy remains a challenge in a strict real-world black-box setting, where both the target domain and the model architecture are unknown. In this paper, we explore a feature contrastive approach in the frequency domain to generate adversarial examples that are robust in both cross-domain and cross-model settings. To that end, we propose two modules that are employed only during the training phase: a Frequency-Aware Domain Randomization (FADR) module that randomizes domain-variant low- and high-range frequency components, and a Frequency-Augmented Contrastive Learning (FACL) module that effectively separates the domain-invariant mid-frequency features of clean and perturbed images. We demonstrate strong transferability of our generated adversarial perturbations through extensive cross-domain and cross-model experiments, without adding to the inference-time complexity.

Overview of FACL-Attack. From the clean input image, our FADR module outputs an augmented image after a spectral transformation that randomizes only the domain-variant low/high frequency components (FCs). From the randomized image, the perturbation generator then produces the bounded adversarial image via a perturbation projector. The resulting clean and adversarial image pairs are decomposed into mid-band (domain-agnostic) and low/high-band (domain-specific) FCs, whose features, extracted from the k-th middle layer of the surrogate model, are contrasted in our FACL module to boost adversarial transferability. The adversarial image is colorized for visualization.
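The bounding step mentioned in the overview can be illustrated with a minimal sketch. It assumes the projector clips the generator output to an L-infinity ball around a reference image and to the valid pixel range [0, 1]. The function name `project_linf`, the default budget `eps=10/255`, and the choice of reference image are hypothetical and are not taken from the text above.

```python
import numpy as np

def project_linf(adv_unbounded, reference, eps=10 / 255):
    """Clip an unbounded adversarial image to an L-infinity ball around `reference`.

    Assumption: pixel values lie in [0, 1] and `eps` is the perturbation budget.
    """
    # Per-pixel bounds: stay within eps of the reference and inside [0, 1].
    lower = np.clip(reference - eps, 0.0, 1.0)
    upper = np.clip(reference + eps, 0.0, 1.0)
    return np.clip(adv_unbounded, lower, upper)
```

With such a projection, the unbounded generator output can take any value, while the image actually fed to the classifier differs from the reference by at most `eps` per pixel.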

Visualization of the spectral transformation by FADR. From the clean input image (column 1), our FADR decomposes the image into mid-band (column 2) and low/high-band (column 3) FCs. FADR randomizes only the domain-variant low/high-band FCs, yielding the augmented output in column 4. The transformations shown here use large hyper-parameter values to make the effect visible.
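The band decomposition and randomization described in this caption can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it uses a 2D FFT with radial band masks on a grayscale image, and it randomizes the low/high band with a multiplicative Gaussian gain. The transform, the cutoffs `r_low` and `r_high`, the noise model, and `sigma` are all hypothetical choices.

```python
import numpy as np

def band_masks(h, w, r_low, r_high):
    """Boolean masks over the FFT grid: mid band, and its complement (low/high)."""
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    radius = np.sqrt(fy ** 2 + fx ** 2)  # normalized radial frequency
    mid = (radius >= r_low) & (radius < r_high)
    return mid, ~mid

def split_bands(image, r_low=0.1, r_high=0.3):
    """Decompose a 2D image into mid-band and low/high-band components."""
    spectrum = np.fft.fft2(image)
    mid_mask, lh_mask = band_masks(*image.shape, r_low, r_high)
    mid = np.fft.ifft2(spectrum * mid_mask).real
    low_high = np.fft.ifft2(spectrum * lh_mask).real
    return mid, low_high

def randomize_low_high(image, rng, sigma=0.5, r_low=0.1, r_high=0.3):
    """Perturb only the low/high-band coefficients; the mid band is left untouched."""
    spectrum = np.fft.fft2(image)
    mid_mask, lh_mask = band_masks(*image.shape, r_low, r_high)
    gain = rng.normal(1.0, sigma, size=image.shape)  # assumed noise model
    randomized = spectrum * mid_mask + spectrum * lh_mask * gain
    return np.fft.ifft2(randomized).real
```

Because the two masks partition the spectrum, the mid-band and low/high-band components sum back to the input image, and the mid-band content of the augmented image matches that of the original.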

Difference map of perturbed features by FACL. From left to right: the clean image, unbounded adversarial images from the baseline and from FACL, and the final difference map (Diff(baseline, baseline+FACL)). Our generated perturbations focus more on domain-agnostic semantic regions such as shape, facilitating a more transferable attack.

Cross-domain evaluation results. The perturbation generator is trained on ImageNet-1K with VGG-16 as the surrogate model and evaluated on black-box domains with black-box models. We compare the top-1 classification accuracy after attacks.

Cross-model evaluation results. The perturbation generator is trained on ImageNet-1K with VGG-16 as the surrogate model and evaluated on black-box models. We compare the top-1 classification accuracy after attacks.

Evaluation on the state-of-the-art models. The perturbation generator is trained on ImageNet-1K with VGG-16 as the surrogate model and evaluated on black-box models. We compare the top-1 classification accuracy after attacks.
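For reference, the top-1 accuracy metric used in these evaluations can be computed as in the short sketch below. The function name and the array layout (one row of class scores per image) are assumptions for illustration only.

```python
import numpy as np

def top1_accuracy(logits, labels):
    """Fraction of samples whose highest-scoring class equals the true label."""
    predictions = np.argmax(logits, axis=1)
    return float(np.mean(predictions == labels))
```

Since the metric is measured on adversarial inputs, a lower top-1 accuracy after the attack indicates a stronger attack.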

Qualitative results on various domains. FACL-Attack successfully fools the classifier: images whose clean labels are shown in black are misclassified as the class labels shown at the bottom in red. From top to bottom: clean images, unbounded adversarial images, and bounded adversarial images, which are the actual inputs to the classifier.

FACL-Attack (facl.attack@gmail.com)

@InProceedings{yang2024FACLAttack,
  title={Frequency-Aware Contrastive Learning for Transferable Adversarial Attacks},
  author={Hunmin Yang and Jongoh Jeong and Kuk-Jin Yoon},
  booktitle={AAAI},
  year={2024}
}